Myth 1: “Because RED shoots RAW, you always have to transcode RED footage, and we don’t have the budget for that.”

This is the old saw of line producers beset by ever-shrinking budgets and schedules. It’s true that RAW files require transcoding to play nicely with post environments that have standardized on a traditional video codec, such as DNx, ProRes, or XDCAM50 (which is still widely used in news and sports broadcasting), but RED is by no means the only RAW-capable camera that falls into this category. Transcoding to create dailies or edit media in traditional video codecs is required by a wide range of camera systems and isn’t limited to cameras that shoot RAW. The same rules apply when creating H.264 media for review on mobile devices. However, if you aren’t bound by the policies of a particular studio, post house or broadcaster, it can often be advantageous to “stay in the RAW” until the final export, avoiding generation loss and maximizing image data for color grading and VFX. Ultimately, though, this point is moot for those with access to the latest bodies from RED’s DSMC2 line of cameras, which have supported simultaneous REDCODE RAW + ProRes (or DNxHD/HR) recording for more than two years.

Myth 2: “REDs only shoot RAW, and RAW data is HUGE! We’ll have to buy tons of expensive storage to handle all this data!”

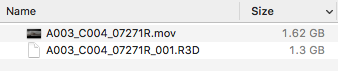

As mentioned above, all DSMC2 bodies can record in ProRes or Avid DNx in addition to (or instead of) RED's R3D RAW format. But the surprising truth is that these traditional, compressed video files can often be larger than the R3D RAW versions. The screenshot above shows an R3D file and a ProRes version of the same frame range (created by transcoding the R3D after off-loading the REDmag).

The original 15 sec. R3D RAW file came in at 1.3GB (6K, REDCODE 8:1 compression, 23.98 fps).

A 4K QuickTime ProRes 4444 file made from it was nearly 20% larger, despite its lower resolution.
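To put those file sizes in terms of storage planning, here is a rough back-of-the-envelope sketch. It assumes 1 GB = 1000 MB and treats "nearly 20% larger" as exactly 20%, so the ProRes figure is an upper-end estimate rather than a measurement. The function and variable names are illustrative only.

```python
def average_rate_mb_per_s(size_gb: float, seconds: float) -> float:
    """Average data rate in MB/s, treating 1 GB as 1000 MB."""
    return size_gb * 1000 / seconds


def gb_per_hour(rate_mb_per_s: float) -> float:
    """Storage consumed by one hour of footage at the given rate."""
    return rate_mb_per_s * 3600 / 1000


clip_seconds = 15
r3d_size_gb = 1.3                    # 6K, REDCODE 8:1, 23.98 fps (from the article)
prores_size_gb = r3d_size_gb * 1.2   # assumption: "nearly 20% larger" rounded to 20%

r3d_rate = average_rate_mb_per_s(r3d_size_gb, clip_seconds)
prores_rate = average_rate_mb_per_s(prores_size_gb, clip_seconds)

print(f"R3D:    {r3d_rate:.1f} MB/s, {gb_per_hour(r3d_rate):.0f} GB per hour")
print(f"ProRes: {prores_rate:.1f} MB/s, {gb_per_hour(prores_rate):.0f} GB per hour")
```

Real-world rates vary with scene content, REDCODE ratio, frame rate and resolution, so treat these numbers as a sanity check for a storage budget, not a specification.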

Myth 3: “You need a very, very expensive computer to work on RED footage. Editing RED files on a laptop? Forget about it!”

Current and previous-generation Mac and PC laptops are nothing like the laptops of even five years ago. Their performance is competitive with desktop systems, and the advent of eGPUs (external PCIe chassis that can host powerful graphics cards) means laptops can provide truly outstanding results in a portable package. And, by dialing back the RAW decoding and display resolution (called De-Bayer and Mercury Playback Engine playback resolution in Adobe Premiere Pro), you can usually find a reasonable balance between real-time playback and image quality during editing. Also, Premiere Pro will automatically scale the quality up to full when the image is paused. Similar functionality is available in DaVinci Resolve and Final Cut Pro X. For those on the desktop PC side, the performance level of commodity PC hardware has never been higher. Machines that can be assembled for $1,000 to $1,500 can easily outperform a late-2013 Mac Pro “Trashcan.”
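The reason dialing back decode resolution helps so much is simple arithmetic: halving the resolution on each axis quarters the number of pixels the machine has to produce per frame. The sketch below illustrates this with an assumed 6144 x 3160 frame size, chosen only as an example; `decoded_size` is a hypothetical helper, not part of any editing application's API.

```python
def decoded_size(width: int, height: int, divisor: int) -> tuple[int, int]:
    """Frame dimensions when decoding at 1/divisor resolution on each axis."""
    return width // divisor, height // divisor


# Assumed 6K frame size, for illustration only.
full_w, full_h = 6144, 3160

for label, divisor in [("full", 1), ("half", 2), ("quarter", 4), ("eighth", 8)]:
    w, h = decoded_size(full_w, full_h, divisor)
    share = (w * h) / (full_w * full_h)
    print(f"{label:>7}: {w} x {h}  ({share:.1%} of full-resolution pixels)")
```

Actual playback performance also depends on the codec, GPU and storage speed, so the pixel count is only one part of the picture.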

Myth 4: “8K?! That’s ridiculous. Who needs that high of a pixel count? They’re just doing it for bragging rights.”

This one sounds plausible on its face; who could practically utilize so much resolution? More to the point, who would be able to see it (8K displays being somewhat rarer than hen's teeth at the present time)? The answer lies in how single-chip cameras make color. They (pretty much) all use an array of colored filters placed over the underlying grid of photosites. Often called the "Bayer Pattern," after Kodak color scientist Bryce Bayer, the array passes the light collected by each photosite through a red, green or blue filter. Therefore, no individual photosite sees more than a single primary color of light. The original approach for this scheme was to merge the data from a 2×2 quad of adjacent photosites into a single RGB value. Since the resulting "child" pixel's "ancestors" had seen all three colors of light, a low-error, full-color (4:4:4) value could be derived for each resulting image pixel (at the cost of 75% of the sensor's spatial resolution). Many 1st- and 2nd-generation digital cinema cameras (such as the Canon C100, C300 and Sony FS700) used a 4K sensor in this way to produce very nice HD-resolution images. Of course, color can be interpolated in other clever ways that sacrifice far less resolution, but it’s not really possible to capture non-subsampled color from a single-chip sensor with a primary filter array at full resolution (aka “1:1”) on the sensor. If you consider that we're moving into a world where 4K deliverables will become the default for most projects, RED's 8K sensors start to make a lot of sense: an 8K photosite grid provides the oversampling needed to derive a 4K image with full color at every pixel.
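The quad-merging approach described above can be sketched in a few lines. This is a minimal illustration, assuming an RGGB filter layout (red top-left, blue bottom-right, two greens on the other diagonal) and simple averaging of the two green samples; it is not how any particular camera or RED's own processing works, and `bin_bayer_quads` is a hypothetical name.

```python
def bin_bayer_quads(mosaic):
    """Collapse a Bayer mosaic into RGB pixels, one per 2x2 quad.

    mosaic: list of rows of raw photosite values with even dimensions.
    Assumed filter layout for each quad (RGGB):
        R G
        G B
    """
    height, width = len(mosaic), len(mosaic[0])
    image = []
    for y in range(0, height, 2):
        row = []
        for x in range(0, width, 2):
            r = mosaic[y][x]
            # Two green photosites per quad: average them.
            g = (mosaic[y][x + 1] + mosaic[y + 1][x]) / 2
            b = mosaic[y + 1][x + 1]
            row.append((r, g, b))
        image.append(row)
    return image


# A tiny 4x4 mosaic of made-up photosite values.
mosaic = [
    [200,  90, 180,  70],
    [100,  40, 110,  30],
    [ 50,  60,  20,  80],
    [ 70,  10,  90,   5],
]

rgb = bin_bayer_quads(mosaic)
photosites = len(mosaic) * len(mosaic[0])
pixels = len(rgb) * len(rgb[0])
print(f"{photosites} photosites -> {pixels} RGB pixels "
      f"({1 - pixels / photosites:.0%} of spatial resolution given up)")
```

Each output pixel has seen all three primaries, which is where the 75% resolution cost comes from: the 16 photosites here become 4 full-color pixels. By the same arithmetic, a mosaic 8192 photosites wide would yield an image 4096 pixels wide.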

Myth 5: “Nothing else writes the R3D file format except the camera. How can I create trimmed clips without leaving the RAW domain and recompressing in another codec like ProRes?”

Both REDCINE-X PRO and DaVinci Resolve will export trimmed R3D files without recompressing. This works because the wavelet-based codec used by RED’s RAW files is completely intra-frame: no frame depends on the preceding or subsequent frames in a file, so any frame range can be copied out intact. Also, both applications mentioned above are available for the low, low price of… FREE!